NVIDIA, SK Hynix, Samsung, and Micron Are Working on a New SOCAMM Memory Standard for AI-Powered PCs

NVIDIA is best known for its graphics cards. Just a few weeks ago it launched the RTX 50 series, which has shipped in such small numbers that it has been widely described as a paper launch. But NVIDIA also develops other technologies, and it is now involved in a project to create a new memory standard.

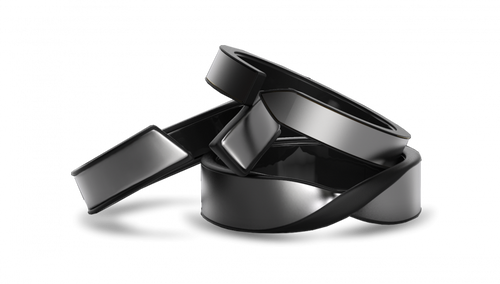

According to SEDaily, NVIDIA is reportedly leading a collaboration with Samsung, Micron, and SK Hynix to create a new standard for AI-focused memory. The new standard would be called System on Chip Advanced Memory Module, or simply SOCAMM.

One of the main differences between gaming graphics cards and professional or AI graphics cards is the amount and type of memory they use. Gaming GPUs use GDDR6, GDDR6X, or, in the case of NVIDIA's latest RTX 50 series, GDDR7. Graphics cards for professional use and artificial intelligence instead use high-bandwidth, high-capacity HBM memory. This is why some of these GPUs now exceed 100GB of memory, a capacity that these applications require.

AI GPUs will continue to use HBM3E and HBM4 memory going forward, but that doesn't mean every device will follow suit. NVIDIA also wants to build computers like Project DIGITS, which you may remember as a compact AI supercomputer. It uses a Grace Blackwell GB10 Superchip with a 20-core Arm CPU and 128GB of LPDDR5X memory.

The chip's Blackwell GPU delivers up to 1 petaFLOP of FP4 AI performance, which lets DIGITS run LLMs of up to 200 billion parameters, and the system runs Linux, so it supports a wide range of tools. Inspired by DIGITS, NVIDIA has teamed up with Samsung, SK Hynix, and Micron to create a memory format called SOCAMM (System on Chip Advanced Memory Module). It is an evolution of the CAMM standard adapted specifically to NVIDIA's chips, and the manufacturers hope to begin mass production in 2025.
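Why does memory capacity matter so much here? A rough calculation helps. The sketch below estimates how much memory the weights of a 200-billion-parameter model occupy at different precisions and checks that against DIGITS' 128GB. It is a simplification under stated assumptions: it counts weights only (ignoring the KV cache, activations, and the operating system) and treats 1 GB as 10^9 bytes; the function and constant names are illustrative, not from any NVIDIA tool.

```python
# Rough estimate of the memory needed just to hold an LLM's weights.
# Assumptions: weights only (no KV cache, activations, or OS overhead),
# and 1 GB = 10**9 bytes for both the model size and the 128GB figure.

BITS_PER_BYTE = 8
DIGITS_MEMORY_GB = 128  # memory capacity quoted for Project DIGITS


def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Return the size of the model weights in gigabytes."""
    total_bytes = num_params * bits_per_param / BITS_PER_BYTE
    return total_bytes / 1e9


for bits in (16, 8, 4):
    need = weight_memory_gb(200e9, bits)
    fits = need <= DIGITS_MEMORY_GB
    print(f"200B params at {bits}-bit: {need:.0f} GB, fits in 128 GB: {fits}")
```

Under these assumptions, only the 4-bit (FP4) case fits: 200 billion weights at half a byte each come to about 100GB, while 8-bit and 16-bit weights would need roughly 200GB and 400GB.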

SOCAMM has more I/O pins than LPCAMM and conventional DRAM modules: 694 in total, compared with 644 and 260, respectively. SOCAMM would also be more cost-effective than conventional DRAM on SO-DIMMs. It is smaller than traditional DRAM modules, and because it is a removable module, it makes upgrades easier. NVIDIA's goal with this memory is to enable palm-sized PCs with strong AI capabilities.
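To put the reported I/O counts in perspective, the small sketch below computes how many more pins SOCAMM has than each alternative and the ratio between them. It uses only the three figures quoted above; the dictionary keys and the `compare` helper are hypothetical names chosen for illustration. Note that pin count alone does not determine bandwidth, which also depends on the memory type and its data rate.

```python
# I/O pin counts as reported for each module type.
PIN_COUNTS = {"SOCAMM": 694, "LPCAMM": 644, "DRAM module": 260}


def compare(a: str, b: str) -> tuple[int, float]:
    """Return (extra pins, ratio) of module a relative to module b."""
    extra = PIN_COUNTS[a] - PIN_COUNTS[b]
    ratio = PIN_COUNTS[a] / PIN_COUNTS[b]
    return extra, ratio


for other in ("LPCAMM", "DRAM module"):
    extra, ratio = compare("SOCAMM", other)
    print(f"SOCAMM vs {other}: +{extra} pins ({ratio:.2f}x)")
```

By these numbers, SOCAMM has 50 more pins than LPCAMM (about 8% more) and roughly 2.7 times as many as a conventional DRAM module.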

Since AI workloads depend heavily on memory, this new form factor makes sense: it packs that memory into a smaller, more efficient format. Jensen Huang has said that artists, engineers, and anyone working with AI will need small personal supercomputers in the future, and that is exactly the niche SOCAMM targets. We don't know whether DIGITS-style supercomputers will ever be common in our homes, but the next generation of these machines is expected to use this memory.