Google Loses $100 Billion Due to Its AI Chatbot "Bard"

On Wednesday, Google unveiled a significant overhaul of its widely used search engine, which is still in development. The company's blog post described it as "an important next step in artificial intelligence." A day earlier, competitor Microsoft had demonstrated to the world how OpenAI's popular ChatGPT technology was being integrated into its search engine, Bing. Google, which has been making strides in artificial intelligence for years, could not afford to fall behind.

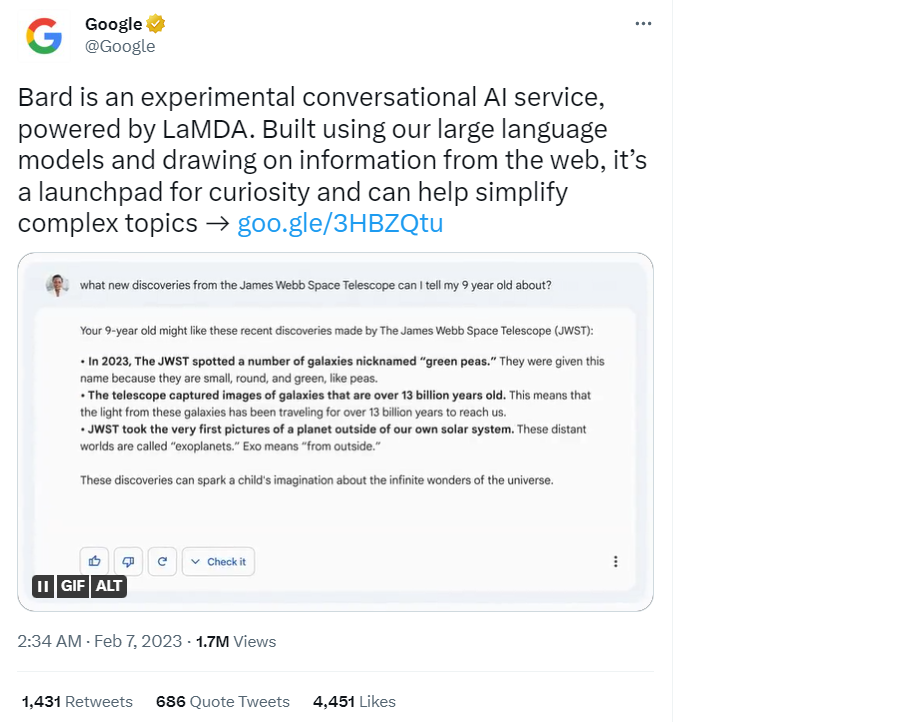

Google moved quickly this week, unveiling its new AI chatbot, Bard, and releasing a promotional video showcasing it. In the video, Bard was asked, "What should a 9-year-old child learn about the recent discovery of the James Webb Space Telescope (JWST)?"

The AI system offered three answers, but one was incorrect: the claim that the James Webb telescope took the first image of an exoplanet. In fact, the first picture of an exoplanet was taken in 2004, as astrophysicists quickly pointed out on Twitter.

Like ChatGPT, Bard tends to fabricate information. To make matters worse, the mistake appeared in Google's own advertising materials, suggesting that the launch of Bard was hasty and poorly planned. This creates the impression that, for the first time in its 25-year history, Google is on the defensive.

The news of Google's failure reached the U.S. stock market: shares of parent company Alphabet fell 7.7% on Wednesday, wiping $100 billion off its market value, after Reuters first reported Bard's inaccurate response.

In response, Google issued a statement saying that it continuously works to improve the system and to meet high quality standards.

Misguidance or Misinformation

Google's mistake highlighted a significant issue with artificial intelligence: it relies not only on proven facts but also on vast amounts of existing text that is not necessarily accurate. This text may contain errors, misguidance, or misinformation, yet AI systems still use it to identify patterns. As a result, they can easily be influenced by inaccurate or false information.

Responses from chatbots like ChatGPT or Bard to questions and tasks can sometimes be inaccurate. The astrophysicist who spotted Google's error commented on Twitter that although these programs are "creepily impressive," they tend to be "wrong with confidence."

What do you think of the Google AI chatbot, Bard? Join our community to leave a comment, and don't forget to follow "Heyup Newsroom" to stay up to date on the latest news and updates from the technology world of the future.