Apple wants you to control your iPhone with your mind!

Apple is working on one of its most ambitious accessibility initiatives yet: controlling its devices through brain activity. According to the Wall Street Journal, Apple has partnered with Synchron, a company specializing in brain-computer interfaces. The project could change the daily lives of people with neurodegenerative diseases such as ALS (amyotrophic lateral sclerosis, also known as Lou Gehrig's disease).

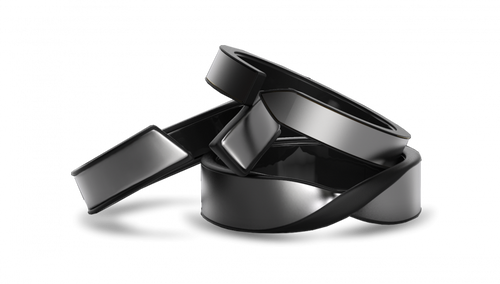

At the heart of this project is the Stentrode, a stent-like device implanted in a vein that sits atop the brain's motor cortex. Unlike more invasive approaches, such as Neuralink's, Synchron's implant is inserted via the jugular vein using a minimally invasive endovascular procedure. Once in place, the device uses electrodes to read neural signals and transmit them to digital interfaces.

Since 2019, the Stentrode has been implanted in ten patients in clinical trials, and Apple is now working with Synchron to integrate these signals into iOS, iPadOS, macOS, and visionOS, allowing users to control their devices without any physical movement.

In its article, the WSJ highlights the testimony of Mark Jackson, a patient with ALS. Thanks to his implant and a Vision Pro, he was able to enjoy an immersive virtual reality experience of the Swiss Alps, even though he is physically unable to leave his home in Pittsburgh.

Today, he is learning to navigate his iPhone and iPad by thought alone, although the system remains experimental and does not yet fully support cursor movement. Despite these limitations, the experience represents a major milestone for the future of brain-machine interfaces.

iOS 19: Towards native support for brain-machine interfaces

As part of iOS 19 and visionOS 3, expected this fall, Apple plans to expand support for brain-machine interfaces (BMIs) through a new protocol for Switch Control commands. This technology will allow users of systems like the Stentrode to navigate Apple interfaces without direct physical interaction, opening the way to practical uses for people with severe paralysis.

Apple is also planning a major update to its Personal Voice feature, which launched with iOS 17. Initially, users had to record 150 phrases to train a personalized synthetic voice, a process that took several hours.

With iOS 19, Apple is reducing this requirement to just 10 phrases, which are processed in less than a minute thanks to optimized algorithms. The resulting voice is also smoother and more natural, helping people at risk of losing their voice, such as those with ALS, maintain a vocal connection with their loved ones and their environment.