-

Samsung Unveils 2026 Bespoke AI Steam Robot Vacuum with Double the Power and Smarter Cleaning

Samsung's new 2026 Bespoke AI Steam robot vacuum introduces doubled suction power, superior mobility, and a steam-cleaning station for a revolutionary home cleaning experience.

-

Decoding Apple's 'Magic': The Philosophy Behind a Seamless Ecosystem

Explore how Apple transforms advanced technology into a magical experience by making it non-intrusive, borderless, and universally accessible, allowing technology to fade into the background.

-

G.Skill Reaches $2.4 Million Settlement in DDR4/DDR5 RAM Speed False Advertising Lawsuit

G.Skill will pay $2.4 million to settle a class-action lawsuit over misleading DDR4 and DDR5 RAM speed advertisements, agreeing to update its marketing and packaging.

-

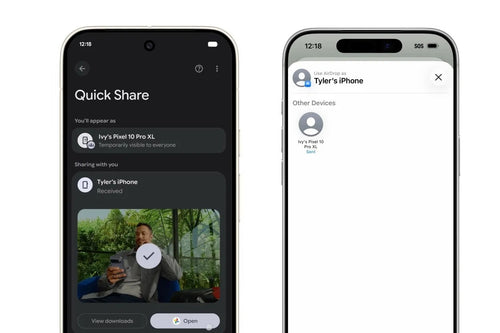

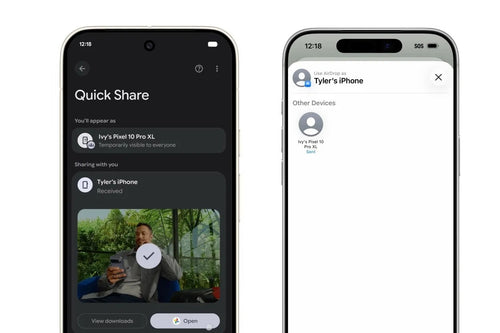

Android Gets Native AirDrop Support: Google Plans to Bridge the Gap with Apple

Google is bringing native AirDrop compatibility to the entire Android ecosystem, revolutionizing file sharing between Android and Apple devices and blurring the lines between the two walled gardens.

-

Apple Kicks Off 2026 Heart Month Challenge on Valentine's Day

This Valentine's Day, Apple Watch users can earn an exclusive badge and stickers by completing the 2026 Heart Month Challenge. Just close your Exercise ring on February 14th.

-

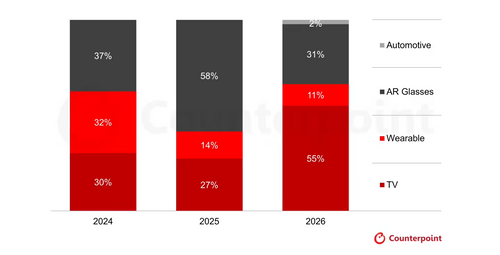

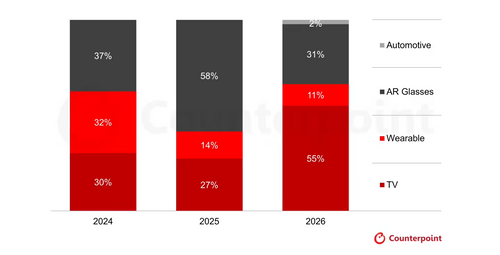

AR Glasses: The Killer App Fueling Micro LED's Explosive Growth

AR smart glasses are set to dominate the Micro LED market, driving massive revenue growth and becoming the technology's first killer application, a new report finds.

-

Sony Japan Announces 'PC READY' DualSense Controller Bundle with USB-C Cable

Sony has announced a new 'PC READY' DualSense controller bundle for the Japanese market, which includes a Midnight Black controller and a complimentary USB-C to USB-C cable.

-

Intel Sunsets Controversial 'Pay-to-Unlock' Hardware Feature

Intel has quietly ended its controversial 'On Demand' pay-to-unlock hardware feature for Xeon processors, a service that faced significant criticism since its inception.

-

Xiaomi Poco X8 Pro Leaks: Certification Reveals Dimensity 8500 Chip and Up to 12GB RAM

The Xiaomi Poco X8 Pro series has appeared in a certification database, hinting at a Dimensity 8500 chip, a 120Hz display, and a starting price of €479.

-

Xiaomi's Magnetic Lens Enters Mass Production: Is the Modular Phone Making a Comeback?

A leak suggests Xiaomi is mass-producing a magnetic lens, potentially reviving modular phone concepts and creating a new paradigm for mobile photography by separating optics from the phone's hardware.

-

AYANEO NEXT 2 Unveiled: A Flagship Windows Handheld with Zen 5 Power and a Massive OLED Display

AYANEO introduces the NEXT 2, a premium Windows handheld with a powerful AMD Zen 5 processor, a large OLED display, and advanced controls for the ultimate portable gaming experience.

-

Next-Gen Xbox: A Windows 11 Powerhouse with Steam Support Aiming for a 2027 Debut

Microsoft's next-gen Xbox is reportedly a Windows 11-powered device with full backward compatibility and support for PC game stores, potentially launching as early as 2027.

-

From Museum Piece to Modern Phone: The Reborn Project Revives the Nokia N8

A dedicated custom ROM project named Reborn is breathing new life into the classic Nokia N8, transforming the Symbian-powered legend from a collector's item into a daily-usable phone.

-

Insta360's New Gimbal Camera to Challenge DJI Pocket with Telephoto Lens and Modular Design

Insta360 is reportedly launching a new handheld gimbal camera to challenge DJI's Pocket series, featuring a revolutionary modular design and a first-ever telephoto lens.

-

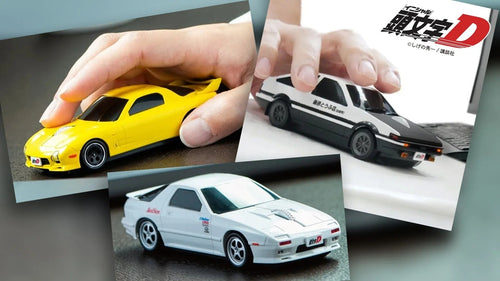

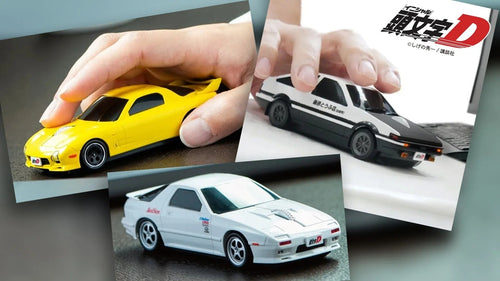

Initial D's Iconic AE86 Drifts Onto Your Desk as a 30th Anniversary Wireless Mouse

Drift into nostalgia with the Initial D 30th Anniversary AE86 wireless mouse, complete with working pop-up headlights and classic Fujiwara Tofu Shop branding.

-

Elon Musk Dispels Rumors: SpaceX Is Not Developing a Smartphone

Elon Musk has officially shut down rumors of a SpaceX-developed smartphone, emphasizing the company's focus on its massive expansion of satellite services and infrastructure.

-

Huawei Expands FreeClip 2 Lineup with Elegant Ice Berry Purple and Rose Gold Options

Huawei has released its FreeClip 2 ear-clip earphones in two stunning new colors, Ice Berry Purple and Rose Gold, blending unique style with powerful audio technology.

-

Galaxy S26 Rumor Roundup: A Revolutionary 'Privacy Display' Steals the Show

The upcoming Samsung Galaxy S26 series is making waves not with raw specs, but with a 'Privacy Display' on the Ultra model, a feature aimed at keeping on-screen content hidden from onlookers.

-

Sony Alpha 7R VI Rumors: 80MP Stacked Sensor and 16+ Stops of Dynamic Range Leaked

Rumors suggest the upcoming Sony Alpha 7R VI will feature a groundbreaking 80MP stacked sensor, 8K video capabilities, and over 16 stops of dynamic range.

-

Black Shark Unveils Compact Gaming Tablet with Snapdragon 8s Gen 3 and 144Hz Display

Black Shark has launched its new Gaming Tablet, a compact device featuring a Snapdragon 8s Gen 3 processor and a stunning 8.8-inch 2K 144Hz display.

-

EAI Robot: A Bold Leap into AI or a Familiar Tale for Investors?

Faraday Future founder Jia Yueting has unexpectedly launched three AI robots, raising questions about their originality and purpose amid his ongoing struggles with electric vehicle production.

-

Samsung Galaxy S26 Ultra to Skip Built-in Magnets, Relies on Cases for Magnetic Charging, Reports Say

The upcoming Samsung Galaxy S26 Ultra will reportedly skip integrated magnets, meaning users will need a special magnetic case to use MagSafe-style accessories and Qi2 wireless charging.

-

Lenovo's 2026 ThinkBook 14 & 16 Ryzen Editions Now on Sale with Powerful Specs

Lenovo's new 2026 ThinkBook 14 and 16 Ryzen laptops are now available, featuring the Ryzen 7 H 260 processor, high-refresh-rate displays, and robust connectivity options.

-

Canon Commemorates 30 Years of PowerShot with a Special Edition G7 X Mark III

Canon unveils a special edition PowerShot G7 X Mark III to celebrate the series' 30th anniversary, featuring a unique design, premium accessories, and a $1,299 price tag.

-

Pixels vs. Purpose: Why the Genie 3 Panic Misses the Point of Great Games

The market panic over Google’s Genie 3 confuses visual mimicry with intentional world-building. While AI can "hallucinate" interactive pixels, it lacks the deterministic logic, physical consistency, and human-crafted "soul" found in masterpieces like Red Dead Redemption 2. Ultimately, AI is a "super-brush" that enhances efficiency, but it cannot replace the creative vision and decades of emotional resonance that define legendary gaming IPs.

-

Google Pixel 10a Officially Debuts Feb 18 with a Sleek, Flush Camera Design

Google's new budget Pixel 10a launches February 18, featuring a sleek, completely flush rear camera system and vibrant new color options like Lavender and Berry.

-

Argon One Up: A Raspberry Pi CM5 Laptop with 14-inch 1200P Display Launches for $400

The Argon One Up, a new DIY laptop kit for the Raspberry Pi CM5, is now available. It features a premium aluminum body, a 14-inch display, and active cooling.

-

Samsung Galaxy S26 Series Teased: AI-Powered Zoom and Enhanced Night Video on the Horizon

Samsung officially teases the Galaxy S26 series, highlighting major upgrades in AI-powered telephoto zoom and night video capabilities ahead of its February 25th launch.

-

Beyond Capacity: Western Digital Unveils Breakthrough HDD Performance Technologies

Western Digital introduces next-generation HDD technologies, promising massive boosts in read/write performance, increased capacity, and improved energy efficiency for future data storage solutions.

-

Xiaomi 17 Series Gets Major Camera Upgrade: LOFIC, Dual-View Video, and More

The Xiaomi 17 series is receiving a significant camera software update, introducing powerful new features like manual LOFIC video mode, dual-view recording, and enhanced professional controls.

-

AMD CEO Confirms Next-Gen Xbox on Track for 2027, New Steam Machine Planned for 2026

AMD CEO Lisa Su confirms the next-gen Xbox is targeting a 2027 launch, while a new AMD-powered Steam Machine is planned for 2026, signaling a clear future roadmap.

-

Alldocube iWork GT Ultra Review: A High-Value Companion for On-the-Go Productivity

Discover the Alldocube iWork GT Ultra, a cost-effective 2-in-1 tablet that balances powerful Intel Core Ultra performance with on-the-go portability for professionals and students.

-

The Rise of External Lenses: Is the iPhone Adopting a Trend from Chinese Smartphones?

From Android flagships to the iPhone, external camera lens kits are reshaping mobile photography. But are they a revolutionary tool or a passing fad? We explore the trend.

-

MANGMI Pocket Max Hands-On: A Deep Dive into the 144Hz Android Gaming Handheld with Modular Controls

A hands-on review of the MANGMI Pocket Max, a new Android handheld boasting a 144Hz AMOLED display, Snapdragon 865, and innovative modular controls for customized gaming.

-

Apple's 2026 Product Blitz: A Foldable iPhone, Redesigned Macs, and a Roadmap for the Next Decade

2026 is shaping up to be a massive year for Apple, with a foldable iPhone, redesigned Macs, and new smart home devices rumored to launch, paving the way for the future.

-

Apple Extends Lifespan of Older Devices with Critical Security Updates

Apple has released vital security updates for older Apple Watch and Mac models, addressing root certificates to maintain core functionality and security for these legacy devices.

-

ASUS ROG and HIFIMAN Launch Kithara: An Audiophile-Grade Planar Magnetic Gaming Headset

ASUS ROG and HIFIMAN have teamed up to release the Kithara, a new open-back planar magnetic gaming headset designed for audiophiles.

-

Ray Tracing on a 1994 Sega Saturn? A Developer Pushes the Limits of Retro Hardware

In a stunning feat of retro engineering, a developer has successfully implemented real-time ray tracing on the 30-year-old Sega Saturn console, far surpassing its original capabilities.

-

Vivo V70 and V70 Elite Teased: Snapdragon 8s Gen 3, Zeiss Triple Cameras, and More

Vivo is set to launch its V70 series, featuring the Snapdragon 8s Gen 3, Zeiss triple cameras, a 120Hz OLED screen, and a massive 6500mAh battery with 90W fast charging.

-

Google Pixel Buds 2a Rumored to Launch in Two Stunning New Colors This Spring

Google's Pixel Buds 2a are reportedly getting a color refresh this spring with two new options: a subtle 'Fog' gray and a vibrant, eye-catching 'Berry' pink.

-

Rogbid Fusion: The Innovative 2-in-1 Wearable That's Both a Smartwatch and a Smart Ring

Rogbid has launched the Fusion, a versatile and affordable 2-in-1 wearable that functions as both a smartwatch and a smart ring, complete with robust health and fitness tracking.

-

Apple's Record Earnings Can't Hide an Inevitable iPhone 18 Price Hike

Apple shatters sales records with the iPhone, but rising component costs mean the upcoming iPhone 18 may come with a higher price tag to protect its legendary profit margins.

-

Realme P4 Power Launches with a Groundbreaking 10,001mAh Battery

Realme has launched the P4 Power in India, its first smartphone with a massive 10,001mAh battery, setting a new standard for endurance in the mid-range market.

-

Samsung Galaxy S26 Series Launch Date Leaked for February 25, Revealing New Design and Features

A new leak points to a February 25th launch for the Samsung Galaxy S26 series, which is expected to feature a major design overhaul and new privacy technology.

-

A New Legend Arrives: Leica Unveils the Noctilux-M 35 f/1.2 ASPH.

Leica introduces the Noctilux-M 35 f/1.2 ASPH., the first 35mm lens in the legendary series, featuring a record-breaking f/1.2 aperture and handcrafted German engineering.

-

Nothing Skips Flagship in 2026, Teases a 'Complete Evolution' with Phone (4a)

Nothing's CEO confirms no new flagship phone this year, with the company instead focusing its efforts on launching a significantly upgraded and redesigned Nothing Phone (4a).

-

End of an Era: Atmosphere Creator SciresM Announces Retirement from the Hacking Scene

SciresM, creator of the widely-used Switch custom firmware Atmosphere, has announced his retirement from the public hacking scene, citing personal reasons and a new chapter in his life.

-

Redmi Turbo 5 Officially Revealed: Dimensity 8500-Ultra, a Massive 7560mAh Battery, and a Launch Tomorrow

Xiaomi officially reveals the Redmi Turbo 5, a new performance-focused phone with a Dimensity 8500-Ultra chip, a massive 7560mAh battery, 100W fast charging, and a premium design.

-

ASUS Unveils the ExpertBook Ultra: A Featherlight Powerhouse with Core Ultra X7 and a 2.8K OLED Display

ASUS has unveiled the ExpertBook Ultra, a new featherlight business laptop packing an Intel Core Ultra X7 processor, 64GB of RAM, and a brilliant 2.8K OLED display.

-

The $30 Kodak Camera That's Selling Out: Nostalgic Genius or a Final Struggle?

Kodak's Charmera keychain camera is a viral sensation despite its poor image quality. This tiny, retro-inspired gadget combines nostalgia with a clever blind-box marketing strategy.

-

Honor WIN Hands-On Review: A 10,000mAh Gaming Beast with a Built-in Fan and 3x Telephoto

The Honor WIN gaming phone pushes boundaries with its massive 10,000mAh battery, active cooling fan, and a versatile 3x telephoto lens, delivering performance beyond expectations.

-

Xiaomi 17 Ultra In-Depth Review: Continuous Optical Zoom + APO Triplet Lens—Has the Phone of the Year Arrived Early?

An in-depth look at the Xiaomi 17 Ultra, featuring a revolutionary continuous optical zoom camera, an APO lens, and exceptional image quality that sets a new industry standard.

-

Honor 500 Pro Review: A Textbook Mid-Range Phone with Exceptional Feel and Battery Life

The Honor 500 Pro raises the bar for mid-range phones with its stunning design, powerful 200MP camera, marathon 8000mAh battery, and flagship-level performance. A true all-rounder.

-

DJI Osmo Action 6 Review: The New Pinnacle of Action Camera Image Quality?

The DJI Osmo Action 6 sets a new standard with its groundbreaking variable aperture, versatile square sensor, and phenomenal battery life, making it the ultimate tool for action videography.

-

Insta360 X4 Air Review: Making 360 Cameras Accessible and Beginner-Friendly

The Insta360 X4 Air balances 8K quality, portability, and an affordable price, making it the perfect beginner-friendly 360 camera for capturing everything.

-

Nothing Ear 3 Review: Where Striking Design Finally Meets Its Sonic Match

The Nothing Ear 3 stands out with its iconic transparent design and finally delivers a balanced, impressive sound quality, making it a rare blend of style and substance.

-

Redmi K90 Pro Max First Look: Is Game-Changing Audio the Key to Success?

A first look at the Redmi K90 Pro Max, a flagship that stands out with top-tier performance and a BOSE-powered 2.1 sound system.

-

iQOO 15 Review: Perfect Gaming Performance, But Photography Has Its Limits

The iQOO 15 delivers flawless gaming performance with its new Snapdragon chip and 2K 144Hz screen, but its camera capabilities reveal some trade-offs for its gaming-centric design.

-

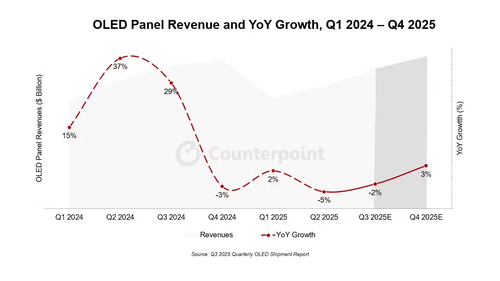

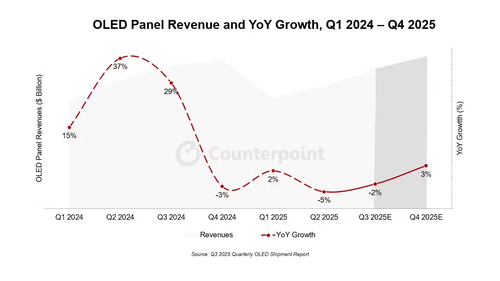

Global OLED Market 2025 Forecast: Samsung Display to Lead with 41% Revenue Share, Says Counterpoint

Counterpoint Research's 2025 OLED market forecast shows Samsung Display leading with a 41% revenue share, amid a market recovery driven by laptops and monitors.

-

The Rise and Swift Fall of the Camera Button: Is Apple's Experiment Over?

Apple's camera button, once hailed as a pro feature and copied by rivals, is rumored to be on its way out. Here’s what went wrong.

-

Report: Nintendo Switch 2 Cartridges to Use 3D NAND for Lower Costs and Higher Capacity

A report from supply chain partner Macronix suggests Nintendo Switch 2 game cartridges will utilize 3D NAND flash, aiming for lower costs and larger storage capacities.

-

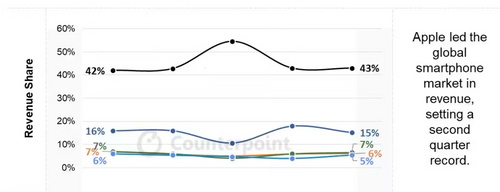

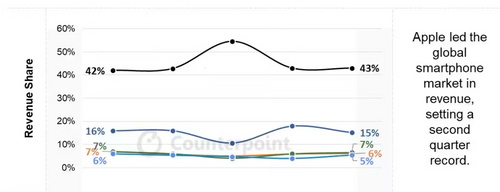

Global Smartphone Market Hits Record $100B in Q2 2025, Apple Dominates Revenue

The global smartphone market reached a record $100 billion in revenue in Q2 2025, with Apple capturing 43% of the total, according to a new Counterpoint Research report.